I recently found myself in a deep state of flow while coding — the kind where time melts away and you gain real clarity about the software you’re building. The difference this time: I was using Claude Code primarily.

If my recent posts are any indication, I’ve been experimenting a lot with AI coding — not just with toy side projects, but high-stakes production code for my day job.

I have a flow that I think works pretty well. I’m documenting it here as a way to hone my own process and, hopefully, benefit others as well.

Many of my friends and colleagues are understandably skeptical about AI’s role in development. That’s okay. That’s actually good. We should be skeptical of anything that’s upending our field with such ferocity. We just shouldn’t be cynical.¹

Step 0: Set the stage

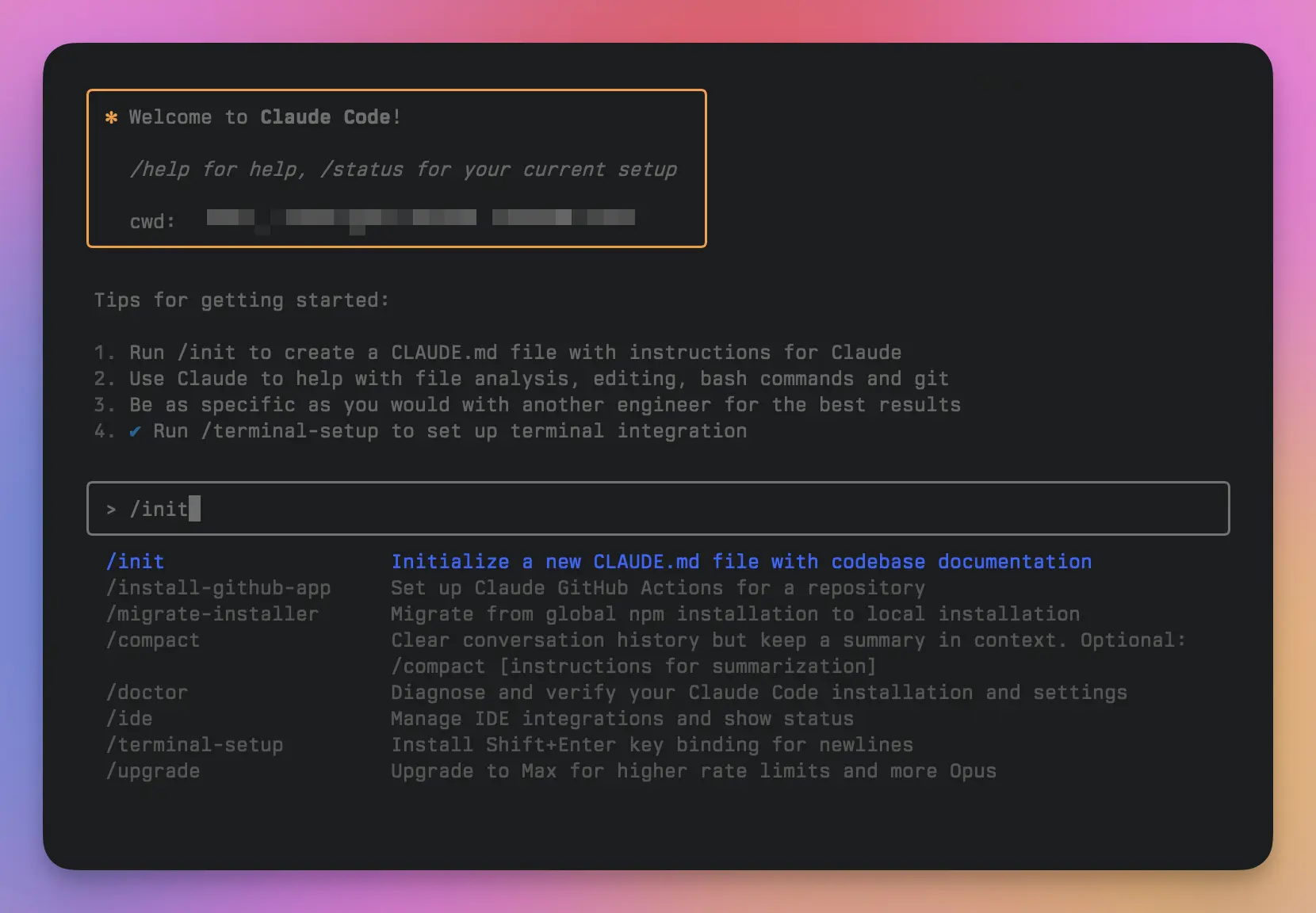

You know what’ll definitely get you out of the flow? Having to constantly repeat basic instructions to your agent.

"This is an Android app that does X, using the Y architecture…"

"When styling the front end web client, only use Tailwind CSS v4…"

"Use `make test` to run a single test; `make tests` to run the entire test suite…"

"To build the app without running lint, use `make`…"

…

These are all very important instructions that you shouldn’t have to repeat to your agent every single time. Invest a little upfront in your master AI instructions file. It makes a big difference and gets you up and coding quickly with any agent.

Of course, I also recommend consolidating your AI instructions into a single source of truth, so you’re not locked in to any single vendor.
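One low-tech way to get that single source of truth is to keep one master file and symlink each vendor's expected filename to it. The sketch below assumes a hypothetical master file at `.ai/instructions.md` and the root-level filenames that, at the time of writing, Claude Code (`CLAUDE.md`), OpenAI's Codex CLI (`AGENTS.md`), and Gemini CLI (`GEMINI.md`) look for; check each tool's docs, and note that symlinks on Windows may need extra permissions. The helper name `link_instructions` is illustrative, not part of any tool.

```python
"""Sketch: expose one master AI-instructions file under the filenames
individual agents look for. Paths and filenames are assumptions."""
import os
from pathlib import Path

# Hypothetical location of the single source of truth.
MASTER = Path(".ai/instructions.md")

# Root-level filenames some agents read (assumed; verify per tool).
AGENT_FILES = ("CLAUDE.md", "AGENTS.md", "GEMINI.md")


def link_instructions(repo_root: Path, master: Path = MASTER,
                      targets: tuple[str, ...] = AGENT_FILES) -> list[Path]:
    """Create relative symlinks from each agent filename to the master file.

    Existing symlinks are refreshed; regular files are skipped so nothing
    hand-written is silently overwritten. Returns the links created.
    """
    master_abs = repo_root / master
    if not master_abs.is_file():
        raise FileNotFoundError(master_abs)

    created = []
    for name in targets:
        link = repo_root / name
        # Check is_symlink() first: a broken link reports exists() == False.
        if link.is_symlink():
            link.unlink()
        elif link.exists():
            print(f"skipping {name}: a regular file already exists")
            continue
        # A relative target keeps the link valid when the repo is cloned or moved.
        link.symlink_to(os.path.relpath(master_abs, start=link.parent))
        created.append(link)
    return created


if __name__ == "__main__":
    for path in link_instructions(Path.cwd()):
        print(f"linked {path.name} -> {MASTER}")
```

Committing the symlinks means every collaborator's agent reads the same instructions, and switching vendors is a matter of adding one filename to the tuple.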

Step 1: Plan with the Agent

Check out my post on ExecPlans. I now use the ExecPlans approach from OpenAI’s Aaron Friel for this step.

I won’t lie. The idea of this step did not exactly spark joy for me. There are times when I plan my code out in a neat list, but they’re rare. More often than not, I peruse the code, formulate the plan in my head, and let the code guide me. Yeah, that didn’t work at all with the agents.

Two phrases you’ll hear thrown around a lot: “Context is king” and “Garbage in, garbage out.” This planning phase is how you provide high-quality context to the agent and make sure it doesn’t spit garbage out.

After I got over my unease and explicitly spent time on this step, I started seeing dramatically better results. Importantly, it also allows you to pause and have the agent pick up the work at any later point in your session without missing a beat. You might be in the flow, but if your agent runs out of tokens, it will lose its flow.

Here’s my process:

- All my plans live in a `.ai/plans/` folder. I treat them as disposable: as soon as a task is done, the plan is deleted.

- Say I’m working on ticket `JIRA-1234` and intend to implement it as a stack² of four PRs.

- Each of those PRs gets one corresponding plan file: `JIRA-1234-1.md`, `JIRA-1234-2.md`, etc.

- Each plan (and PR) should be discrete and implement one aspect of the feature or ticket.

- Of course, I make the agent write all the plans and store them in the folder. (I came this far in my career without being so studious about Jira tasks and stories; I wasn’t about to start now.)
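The folder conventions above are simple enough to script. A minimal sketch, assuming plans are named `<TICKET>-<n>.md` inside `.ai/plans/`; the helpers `plans_for_ticket`, `next_plan`, and `retire_plan` are hypothetical names for illustration. The one subtlety is sorting: a plain string sort would put `JIRA-1234-10.md` before `JIRA-1234-2.md`, so the stack index is parsed as an integer.

```python
"""Sketch of the plan-folder conventions: one plan per stacked PR, named
<TICKET>-<n>.md, deleted once the work lands. Helper names are hypothetical."""
import re
from pathlib import Path
from typing import Optional

PLANS_DIR = Path(".ai/plans")


def plans_for_ticket(ticket: str, plans_dir: Path = PLANS_DIR) -> list[Path]:
    """Return the ticket's plan files in stack order (1, 2, ..., 9, 10)."""
    pattern = re.compile(rf"^{re.escape(ticket)}-(\d+)\.md$")
    numbered = []
    for path in plans_dir.glob(f"{ticket}-*.md"):
        match = pattern.match(path.name)
        if match:  # skip files like JIRA-1234-notes.md
            numbered.append((int(match.group(1)), path))
    # Numeric sort so JIRA-1234-10 comes after JIRA-1234-9.
    return [path for _, path in sorted(numbered)]


def next_plan(ticket: str, plans_dir: Path = PLANS_DIR) -> Optional[Path]:
    """The lowest-numbered remaining plan is the next PR in the stack."""
    plans = plans_for_ticket(ticket, plans_dir)
    return plans[0] if plans else None


def retire_plan(plan: Path) -> None:
    """Plans are disposable: delete one as soon as its task is done."""
    plan.unlink()
```

Because finished plans are deleted, "what's next?" is always just the lowest-numbered file left for the ticket.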

This is the exact prompt I use:

```markdown
You are an Expert Task Decomposer. Your entire purpose is to break down complex goals, problems, or ideas into simple, clear, and actionable tasks. You will not answer my request directly; you will instead convert it into one or more execution plans.

You **MUST** follow this three-step interactive process without deviation:

**Step 1: Propose a Task List.**

First, analyze my request and generate a concise, numbered list of the task titles you propose to create. Your first response to me must ONLY be this list. Do NOT write the full plans yet.

For example, if my request is "I want to create a simple blog," your first response should be something like:

"Understood. I propose the following tasks:

1. Choose and Purchase a Domain Name
2. Set Up Web Hosting
3. Install and Configure Content Management System (CMS)
4. Design and Customize Blog Theme
5. Write and Publish First Three Blog Posts"

**Step 2: Await User Approval.**

After presenting the list, **STOP**. Wait for my explicit confirmation. I will respond with something like "Proceed," "Yes," or request modifications. Do not proceed until you receive my approval.

**Step 3: Get Filename Format & Generate Plans.**

Once I approve the list, you will ask me for the desired filename format. For example: "What filename format would you like? (e.g., `<YYYY-MM-DD>-<TaskName>.md`, `Task-<ID>.txt`)"

After I provide the format, you will generate the full, detailed execution plan for EACH approved task. Each plan must be created in a separate, single file, adhering strictly to the `## OUTPUT FORMAT` specified below.

The files should be created in a @.ai/plans folder. If this folder doesn't exist, ask the user where they need to create it.

---

## OUTPUT FORMAT

Use the following markdown template for every plan you generate:

# Task: [A concise name for this specific, atomic task]

**Problem:** [Briefly explain what this specific task is solving or achieving.]

**Dependencies:** [List any other tasks that must be completed first. Write "None" if there are no dependencies.]

**Plan:**

1. [Clear, explicit Step 1 of the plan]
2. [Clear, explicit Step 2 of the plan]
3. ...

**Success Criteria:** [A simple checklist or a clear statement defining what "done" looks like for this specific task.]

---

## CRITICAL RULES

- **Atomicity:** Each task must be a single, focused unit of work. If a step in a plan feels too large, it should likely be its own task. Err on the side of creating more, smaller tasks rather than fewer, complex ones.
- **Clarity:** Write instructions that are explicit, unambiguous, and can be executed by someone without needing any additional context.
- **Strict Adherence:** The interactive workflow is not optional. Always propose the task list first and await my approval before asking for the filename and generating the plans.

Begin now. My first request is:
```

I keep this prompt saved as a `plan-tasks.md` command in my `.ai/commands` folder, so I don’t have to type it out every time.
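Mechanically, invoking a saved command amounts to sending the stored prompt with your request appended after its final "My first request is:" line. A minimal sketch, assuming the `.ai/commands/<name>.md` layout described here; `build_prompt` is a hypothetical helper for agents that have no built-in notion of saved commands.

```python
"""Sketch: compose a saved command with a request. The folder layout
follows the post; the function name is a hypothetical helper."""
from pathlib import Path

COMMANDS_DIR = Path(".ai/commands")


def build_prompt(command: str, request: str,
                 commands_dir: Path = COMMANDS_DIR) -> str:
    """Return the saved prompt followed by the user's request."""
    template = (commands_dir / f"{command}.md").read_text(encoding="utf-8")
    # The plan-tasks prompt ends with "My first request is:", so the request
    # goes in the paragraph right after it.
    return f"{template.rstrip()}\n\n{request.strip()}\n"
```

Claude Code can handle this natively: Markdown files in a project's `.claude/commands/` folder become slash commands named after the file, so pointing that folder at `.ai/commands` (for example, via a symlink) makes `plan-tasks.md` available as `/plan-tasks`.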

What follows is a clean back-and-forth conversation with the agent that culminates in the agent writing down the task plans. Sometimes I may not understand or agree with a task or its sequencing, so I’ll ask for an explanation and adapt the plan. Other times I’m certain a task isn’t needed and I’ll ask for it to be removed.

This is the most crucial step in the entire process.

If the future of software engineering is going to be AI-reliant, I believe this planning step is what will distinguish the senior engineers from the junior ones. This is where your experience shines: you can spot the necessary tasks, question the unnecessary ones, challenge the agent’s assumptions, and ultimately shape a plan that is both robust and efficient.

I deliberately prune these tasks so they match, as closely as possible, the sequence I would follow myself. To reiterate: the key is to ask for each plan to be written so that another agent can execute it autonomously.

Step 2: The Fun Part - Release the Agents!

After committing all the plans (in your first PR), the real fun begins: we implement `JIRA-1234-1.md`.

You would think my process now is to spawn multiple agents in parallel and ask them to go execute the other plans as well. Maybe one day, but I’m not there yet. Instead, I start to parallelize the work around a single task.

Taking `JIRA-1234-1.md` as an example, I’ll spawn three agents simultaneously:

- Agent 1: The Implementer. Executes the core logic laid out in the plan.

- Agent 2: The Tester. Writes meaningful tests for the functionality, assuming the implementation will be completed correctly.

- Agent 3: The Documenter. Updates my project documentation (typically in `.ai/docs`) with the task being executed.

Here’s the prompt I use for this step:

```markdown
I want you to execute the plan I provide by spawning multiple agents.

But before you start the work:

- Go through the plan, analyze it and see how it applies to the project.
- Come up with your execution steps
- Confirm the strategy with me
- After I confirm, start execution.

Three agents need to do the work.

1. **Agent 1: The Implementer.** Executes the core logic laid out in the plan.
2. **Agent 2: The Tester.** Writes meaningful tests for the functionality, assuming the implementation will be completed correctly.
3. **Agent 3: The Documenter.** Updates my project documentation (see /my-feature/README.md in `.ai/docs`) with the new feature being executed.

Assume the other agents will complete their work successfully.
```
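The prompt asks Claude Code to spawn the sub-agents itself. To make the shape of that fan-out concrete outside any particular tool, here is a minimal sketch: one plan, three role-specific prompts, run concurrently. `run_agent` is a hypothetical placeholder (swap in a CLI subprocess or SDK call of your choice), and the role wording is paraphrased from the prompt.

```python
"""Sketch of the fan-out: one plan, three role-specific agents run in
parallel. `run_agent` is a hypothetical stand-in for a real agent call."""
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass(frozen=True)
class Role:
    name: str
    instructions: str


# Role wording paraphrased from the post's prompt.
ROLES = (
    Role("implementer", "Execute the core logic laid out in the plan."),
    Role("tester", "Write meaningful tests for the functionality, assuming "
                   "the implementation will be completed correctly."),
    Role("documenter", "Update the project documentation in .ai/docs "
                       "with the new feature."),
)


def role_prompt(role: Role, plan_text: str) -> str:
    """Each agent sees the same plan plus only its own responsibility."""
    return (f"You are the {role.name}. {role.instructions}\n"
            "Assume the other agents will complete their work successfully.\n\n"
            f"PLAN:\n{plan_text}")


def run_agent(role: Role, prompt: str) -> str:
    """Hypothetical placeholder: replace with a real agent invocation."""
    return f"[{role.name}] received {len(prompt)} characters"


def fan_out(plan_text: str) -> dict[str, str]:
    """Launch all roles concurrently and collect results keyed by role name."""
    with ThreadPoolExecutor(max_workers=len(ROLES)) as pool:
        futures = {role.name: pool.submit(run_agent, role, role_prompt(role, plan_text))
                   for role in ROLES}
        return {name: future.result() for name, future in futures.items()}
```

The "assume the others succeed" line is what lets the tester and documenter start immediately instead of waiting on the implementer.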

Notice that with this approach, the likelihood of the agents causing merge conflicts is incredibly slim, since each agent works in a different part of the codebase: source code, tests, and docs. Yet all three tasks are crucial for delivering high-quality software.
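That claim can be turned into a quick check, assuming you can attribute edits to each agent (for example, if each one works in its own git worktree, `git diff --name-only` lists the files it changed). A minimal sketch; `overlapping_paths` is a hypothetical helper and the paths in the usage are made-up examples.

```python
"""Sketch: detect files edited by more than one agent. Input is a mapping
from agent name to the set of paths it changed (however you collect them)."""
from collections import defaultdict


def overlapping_paths(changes: dict[str, set[str]]) -> dict[str, list[str]]:
    """Map each path edited by two or more agents to the agents that edited it."""
    touched_by = defaultdict(list)
    for agent, paths in changes.items():
        for path in paths:
            touched_by[path].append(agent)
    return {path: sorted(agents) for path, agents in touched_by.items()
            if len(agents) > 1}


if __name__ == "__main__":
    # Hypothetical example: implementer, tester and documenter stay in their lanes.
    changes = {
        "implementer": {"src/auth.py", "src/routes.py"},
        "tester": {"tests/test_auth.py"},
        "documenter": {".ai/docs/my-feature/README.md"},
    }
    print(overlapping_paths(changes) or "no overlapping files")
```

An empty result is the expected case with this role split; anything else is a hint that the plan's boundaries between roles were drawn loosely.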

This is typically when I’ll go grab a quick ☕. When I’m back, I’ll tab through the agents, but I spend most of my time with the implementer. As code is being written, I’ll pop open my IDE and review it, improving my own understanding of the surrounding codebase.

Sometimes I might realize there’s a better way to structure something, or a pattern I’d missed previously. I don’t hesitate to stop the agents, refine the plan, and set them off again.

Eventually, the agents complete their work and I commit the code (I’m liberal with my git commits and use them as checkpoints).

Step 3: Verify and Refactor

I run the tests, and more often than not, something fails. This isn’t a setback; it’s part of the process. I analyze whether the code was wrong or the test was incomplete, switch to that context, and fix it.

This is also where I get aggressive with refactoring. It’s a painful but necessary habit for keeping the codebase clean. I still lead the strategy, devising the plan myself, and then direct the agent to execute it or validate my approach. While it usually agrees (which isn’t always comforting 🙄), it can sometimes spot details I might have otherwise missed.

Once I’m satisfied and the checks pass, I do a final commit and push up a draft PR.

Step 4: The Final Review

I review the code again on GitHub, just as I would for any other PR. Seeing the diff in a different context often helps me spot mistakes I might have missed in the IDE. If it’s a small change, I’ll make it myself. For anything larger, I’ll head back to the agents.

All the while, I’m very much in the flow. I may be writing less of the boilerplate code, but I’m still doing a lot of “code thinking”. I’m also not “vibe coding” by any stretch. If anything, I’m spending more time thinking about different approaches to the same problem and working to find a better solution. In this way, I’m producing better code.

I’ve been building like this for the past few weeks and it’s been miraculously effective. Deep work beats busywork, every time. Let agents handle the grunt work so you can stay locked in on what matters most.

¹ Lest we get swept away by this tide instead of surfing smoothly over it.

² I absolutely love deconstructing a feature into multiple stacked PRs.
